Give a man a fish and you feed him for a day.

Teach a man to fish and one day he may invent tuna teriyaki.

– proverb

Audio plug-ins are an essential part of the producer’s toolkit; we use them to shape sound the way a woodworker uses a lathe or a plane to shape wood. And as any woodworker will tell you, the ability to fashion your own tools, to suit your own needs, is a mighty handy skill to own.

But programming audio plug-ins, let’s face it, is not a casual undertaking. To even begin playing the game you need a solid grounding in digital signal processing (DSP) along with some serious C, C++ and math chops, all tucked neatly into your belt. And that’s before you start diving into the frameworks of whichever platform it is you’re developing for. Wouldn’t it be neat if there was a kinder, gentler way into this world of streaming ones and zeros, even for reluctant programmers?

Well there is, at least for Mac users: it’s called SuperColliderAU and it’s part of SuperCollider, the powerful open source audio programming environment.

It goes like this: you download and install SuperCollider, roll through a couple of tutorials to get your bearings, start poking around the help files for the included DSP unit generators (UGens, in SuperCollider-speak), string a couple of these UGens together into a working algorithm and bang! generate an Audio Unit plug-in that you can load into your favorite AU-supporting host application. With real time MIDI control. Just like that.

Ok, I’m simplifying a little bit. But in many ways SuperColliderAU is a perfect bridge between having a plug-this-into-that DIY environment like Max/MSP (LEGO bricks, if you will) and working directly with the Apple Core Audio frameworks (Rocket Science.) With much of the scaffolding removed from view, one can – in relatively short order – reach a point where hooking things up this way or that just for the heck of it becomes quite comfortable. In the process you get to learn a little something about DSP and a little something about how audio plug-ins work under the hood. Plus you’re on your way to assembling a personal audio toolbox you can actually use in your production workflows. Now, ain’t that a win-win-win?

Yeah, But…

Right, so I’ve got your interest. But you’re also thinking: if this SuperColliderAU stuff is so hot, how come it’s also, like, so unknown? Who’s using this thing? Where’s the extensive documentation? Where are the legions of tutorial websites? “Where,” you ask, “is The Great International SuperColliderAU Audio-Unit-Sharing Community?”

And the truth is: we’ve got us a few roadblocks.

Gerard Roma, the creator of SuperColliderAU (and the AudioUnitBuilder utility we’ll get to later in this post), turned the project over to the SuperCollider development community some years ago. But although SCAU was officially incorporated into the SuperCollider code base back in 2009 it has yet – as of mid-2012 – to receive any sort of significant public update.

Also – I know not why – the SuperColliderAU component has never been included with binary distributions of SuperCollider (though it is my understanding that this will change in the future.) Now, if you don’t know binary from schminary: binary distributions are those single, pre-built ‘files’ – they’re actually directories made to look like files – that you download, drop into your Applications folder and launch by double-clicking; in other words, binaries are what most Mac users are used to dealing with when they download and install a new application.

So, there’s roadblock #1: to get SuperColliderAU, you need to build SuperCollider – the whole application – from source code. Fine if you’re comfortable doing that sort of thing, highly intimidating if you’re not. And right behind roadblock #1 comes roadblock #2: because the source code for SuperColliderAU has not been updated in several years, getting it to build at all on any moderately recent Mac OS is – even for old hands – a trick indeed.

In fact – as of SuperCollider 3.5 – it’s no longer possible to build the component from source, period!

We’re going to fix this.

In the original version of this series, we focused on modifying the SuperCollider Xcode project files in order to get a working SCAU component. Since that option is now a dead end – the Xcode project files themselves are no longer being maintained – we’re going to shift tactics: first, I’ll provide you with an already-built SuperColliderAU component; then we’ll skip the Xcode project modifications that formerly constituted Part 2 and move right on to building our first plug-in. Doesn’t matter if you’re running Snow Leopard, Lion or Mountain Lion.

But before we dive into building, let’s first try to gain some insight into how the various pieces that make up this excellent tool fit together. To that end, we’re going to briefly skim through four related topics: Audio Units, SuperCollider, SuperColliderAU and the AudioUnitBuilder, more or less in that order. Then, in Part 2, we’ll look at some sample code and walk through building our first audio effect plug-in.

That way, next time, you get to go fishing on your own.

Audio Units

The Audio Unit framework is Apple’s standard for creating audio plug-ins in OS X. Functionally, it is the equivalent of Steinberg’s popular VST application interface (except that VST is cross-platform whereas Audio Units are not.)

This isn’t the place to dig deeply into the nuts and bolts of Audio Units – Apple’s Audio Unit Programming Guide is where you want to go for that – but there are a couple of points worth summarizing for the sake of this discussion:

When we set out to process digital audio in real time, it usually means we’re going to be working with streams of audio; that is, sequences of ones and zeros which – when fed through a Digital-to-Analog converter – reconstitute a time-varying electrical signal that can be amplified and reproduced as sound.

Audio streams are typically processed in blocks (that is, the stream is broken down into chunks of a manageable length) and passed through an audio processing graph which is — just like it says — where the actual processing of audio takes place. The ‘graph’ bit comes into play because what we’re doing is chaining a group of processes together in series, like… well, like a graph. For a device that processes external audio, that usually means an input process at one end, an output process at the other and lots of action in the middle.

It can be useful to think of an audio graph as a series of black boxes, each contributing its bit of processing to the incoming stream and passing the result on to the next ‘box’.
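To make the black-box picture a little more concrete, here is a minimal C++ sketch of the idea. It is not Core Audio code: `Process`, `gain`, `hardClip` and `runGraph` are names invented for this illustration, and a real Audio Unit receives its blocks from the host rather than slicing up a finished buffer.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// One "black box": receives a block of samples and modifies it in place.
using Process = std::function<void(std::vector<float>&)>;

// Box that scales every sample by a fixed amount.
Process gain(float amount) {
    return [amount](std::vector<float>& block) {
        for (float& sample : block) sample *= amount;
    };
}

// Box that limits every sample to the range [-limit, +limit].
Process hardClip(float limit) {
    return [limit](std::vector<float>& block) {
        for (float& sample : block) {
            sample = std::max(-limit, std::min(limit, sample));
        }
    };
}

// Cut the stream into blocks of blockSize samples (the last block may be
// shorter) and pass each block through every box in the graph, in order.
std::vector<float> runGraph(const std::vector<float>& stream,
                            const std::vector<Process>& graph,
                            std::size_t blockSize) {
    assert(blockSize > 0);
    std::vector<float> output;
    output.reserve(stream.size());
    for (std::size_t start = 0; start < stream.size(); start += blockSize) {
        const std::size_t end = std::min(start + blockSize, stream.size());
        std::vector<float> block(
            stream.begin() + static_cast<std::ptrdiff_t>(start),
            stream.begin() + static_cast<std::ptrdiff_t>(end));
        for (const Process& process : graph) process(block);
        output.insert(output.end(), block.begin(), block.end());
    }
    return output;
}
```

Calling `runGraph(samples, {gain(2.0f), hardClip(1.0f)}, 64)` would double every sample and then clip the result, 64 samples at a time. The order of the boxes matters: clipping before the gain stage would give a different signal.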

In addition to the various tasks associated with setting up the graph and synchronizing (not to mention processing) incoming and outgoing blocks of audio, Audio Units also need to be able to identify themselves to host applications, specify channel layouts, display a graphical user interface, handle the routing of real-time control changes and so on. In other words, there is an impressive collection of complex behavior involved in building one of these suckers.

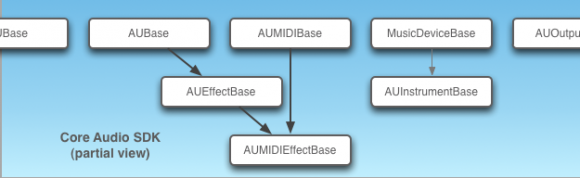

To address this level of complexity, Apple provides us with a software developers’ kit – the Core Audio SDK – which pre-defines a set of classes for building different types of Audio Units. Now, a ‘class’ – if this is completely new to you – can be thought of as a kind of abstract prototype or template, not unlike its meaning in Biology. Individuals who belong to a class share features common to the whole class, but within a given class we may have subclasses that bring in additional, specialized features or characteristics. And subclasses may have other subclasses.

For example, if a Core Audio SDK class has some particular functionality built into it – say, the ability to receive and transmit MIDI data – and you create a new class based on this parent class, your subclass – the child – will possess all the capabilities of the parent plus whatever new features you’ve endowed it with (perhaps the ability to apply some special processing to that MIDI data.) Alright, we’re generalizing somewhat but that’s basically the gist of it.
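If you have never seen inheritance in code, the parent/child relationship described above might look something like this in C++. Both classes are hypothetical and far simpler than anything in the Core Audio SDK: `MidiDevice` stands in for a parent that can receive and transmit MIDI notes, and `Transposer` is a child that adds its own processing on top.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical parent class: it can receive MIDI note numbers and
// transmit them again.
class MidiDevice {
public:
    virtual ~MidiDevice() = default;

    void receive(int note) {
        assert(note >= 0 && note <= 127);  // valid MIDI note range
        pending_.push_back(note);
    }

    // Hand back every pending note, passing each through process() first.
    std::vector<int> transmit() {
        std::vector<int> out;
        for (int note : pending_) out.push_back(process(note));
        pending_.clear();
        return out;
    }

protected:
    // Hook for subclasses; the parent passes notes through unchanged.
    virtual int process(int note) const { return note; }

private:
    std::vector<int> pending_;
};

// Child class: inherits receive() and transmit() untouched and adds one
// new feature, transposition by a fixed number of semitones.
class Transposer : public MidiDevice {
public:
    explicit Transposer(int semitones) : semitones_(semitones) {}

protected:
    int process(int note) const override {
        return std::max(0, std::min(127, note + semitones_));
    }

private:
    int semitones_;
};
```

Notice that `Transposer` never mentions receiving or transmitting. It gets both for free from its parent and only describes what is different about itself.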

This greatly simplifies what would otherwise be an overwhelming process: by basing your plug-in on a Core Audio SDK class, you get a fully functioning Audio Unit straight out of the box – all you need to do is add your stuff on top of it. And that’s not all: the SDK also generates a graphical user interface, automatically, using whatever control parameters – level, pan, cutoff frequency, squishy factor, you name it – you define for your plug-in.

So, taking a step back, we have an SDK that defines a collection of abstract Audio Unit types, each inheriting its particular behavior through a chain of parent classes. Further, each of these abstract types carries a 4-character ‘type’ code which identifies the plug-in type to host applications. And each custom plug-in we build from one of these abstract types gets a unique 4-character ‘subtype’ code, which acts as a signature identifying the plug-in itself. The ‘subtype’ code is provided by you, as developer, when the plug-in is built, but the ‘type’ codes are pre-defined by Apple. You’ll find a complete list of available types in the Audio Unit Programming Guide; the ones we want to mention here are:

- Effect units (class name: AUEffectBase, type code: aufx)

- Instrument units (class name: AUInstrumentBase, type code: aumu)

- Music Effect units (class name: AUMIDIEffectBase, type code: aumf)

Effect units process external audio. Instrument units (aka ‘virtual instruments’) receive incoming MIDI note data and use it to trigger internally-generated sounds. Music Effect units combine features of both. All of these classes inherit from other base classes – such as AUBase – which provide more generalized, lower-level functions.
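Why exactly four characters? As far as I know, Core Audio stores these codes as 32-bit integers, one byte per character, which is why they are so compact and so strict about length. The sketch below shows the packing; `packCode` and `unpackCode` are names made up for this example, not SDK functions.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>

// Pack a 4-character code such as "aufx" into one 32-bit integer,
// with the first character in the most significant byte.
std::uint32_t packCode(const std::string& code) {
    assert(code.size() == 4);
    std::uint32_t packed = 0;
    for (unsigned char c : code) packed = (packed << 8) | c;
    return packed;
}

// Reverse the packing: turn the integer back into its four characters.
std::string unpackCode(std::uint32_t packed) {
    std::string code(4, ' ');
    for (int i = 3; i >= 0; --i) {
        code[static_cast<std::size_t>(i)] = static_cast<char>(packed & 0xFFu);
        packed >>= 8;
    }
    return code;
}
```

With this packing, `packCode("aufx")` comes out as `0x61756678`: the ASCII values of 'a', 'u', 'f' and 'x' laid side by side.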

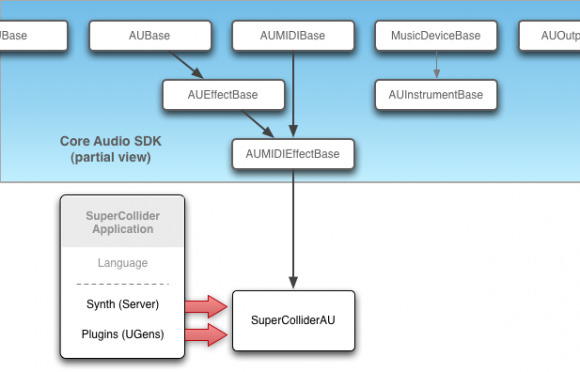

So there’s a kind of genealogy at play, and for AUMIDIEffectBase – the class we’re most interested in, because it is the parent of SuperColliderAU – that genealogy goes something like this: AUBase provides basic functionality common to all devices that process incoming audio; AUEffectBase is a child of AUBase, thereby inheriting that functionality but adding to it the ability to process external audio; finally, AUMIDIEffectBase is a child of AUEffectBase – it can do all the same stuff – but it is also a child of AUMIDIBase, which provides it with MIDI control capabilities.

Here’s our version of the Apple side of the family tree:

Notice that AUMIDIEffectBase does not inherit from AUInstrumentBase – they’re two different animals inhabiting different branches of the tree. We’ll get back to this, and why it matters, shortly.
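For readers who prefer code to family trees, here is the same genealogy as a C++ sketch. The class names match the ones mentioned above, but these are empty stand-ins inside a `sketch` namespace, not the real SDK classes, and the parents of MusicDeviceBase are deliberately left out because they don't matter for the point being made.

```cpp
#include <type_traits>

namespace sketch {

struct AUBase {};                    // functionality common to all units
struct AUMIDIBase {};                // MIDI control capabilities
struct AUEffectBase : AUBase {};     // adds processing of external audio

// Two parents: audio processing from one side, MIDI from the other.
struct AUMIDIEffectBase : AUEffectBase, AUMIDIBase {};

// The separate instrument branch (its own parents are omitted here).
struct MusicDeviceBase {};
struct AUInstrumentBase : MusicDeviceBase {};

// SuperColliderAU is a child of AUMIDIEffectBase.
struct SuperColliderAU : AUMIDIEffectBase {};

}  // namespace sketch

// The compiler can confirm what the tree shows.
static_assert(std::is_base_of<sketch::AUBase, sketch::SuperColliderAU>::value,
              "SuperColliderAU inherits the common AUBase behavior");
static_assert(std::is_base_of<sketch::AUMIDIBase, sketch::SuperColliderAU>::value,
              "SuperColliderAU inherits MIDI control");
static_assert(!std::is_base_of<sketch::AUInstrumentBase, sketch::SuperColliderAU>::value,
              "SuperColliderAU is not on the instrument branch");
```

The last check is the interesting one: nothing on the instrument branch is an ancestor of SuperColliderAU, so nothing defined there is available to it.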

Now, let’s take a deep breath and move on over to SuperCollider.

SuperCollider

If you’re not already familiar with it, SuperCollider is an object-oriented programming environment optimized for real-time audio processing. It was developed by James McCartney, first as a commercial application but later released to the open source community under the GNU General Public License. (This means, of course, that any plug-ins or applications you create with SuperCollider or SuperColliderAU need to comply with the terms of the GPL.) It has since been ported to Linux and Windows, is very much under active development and boasts a thriving global user base. Oh yes, and it’s free.

We won’t go into great detail here about SuperCollider as that would quickly take things outside the scope of the present discussion. Besides, the Wikipedia link above should fill you in nicely. (You may also want to have a look at Wikipedia’s Comparison of Audio Synthesis Environments to get a feel for how SuperCollider lines up with other audio processing environments.)

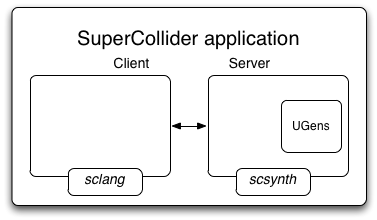

We will, however, attempt to stoke your interest by mentioning just a few of the features that make SuperCollider unique: its client/server architecture; native support for Open Sound Control (OSC); the ability of the client and server to communicate, using UDP and TCP, over a network; the ability to dynamically re-route audio processes while they are running; the ability to create standalone applications; and oh yes: the ability of the audio server to respond to any OSC-capable client, not just the SuperCollider language (that’s right: the audio server can be controlled by other programming languages like Python or Ruby and other audio applications such as Max/MSP.)
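The reason any OSC-capable client can talk to the server is that an OSC message is just a small, well-defined packet of bytes. The sketch below builds one such packet in C++ without sending it anywhere. The layout follows my reading of the OSC 1.0 specification (strings zero-padded to a multiple of four bytes, integers big-endian), and the `/s_new` command with its arguments follows my understanding of the SuperCollider server command reference. The helper names and the SynthDef name you pass in are assumptions made for this example.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <vector>

using Bytes = std::vector<std::uint8_t>;

// OSC strings end with a zero byte and are zero-padded to a multiple of 4.
// This relies on `out` already being 4-byte aligned, which holds here
// because every helper appends a multiple of 4 bytes.
void appendString(Bytes& out, const std::string& text) {
    out.insert(out.end(), text.begin(), text.end());
    out.push_back(0);
    while (out.size() % 4 != 0) out.push_back(0);
}

// OSC integers are 32 bits wide, most significant byte first.
void appendInt32(Bytes& out, std::int32_t value) {
    const std::uint32_t bits = static_cast<std::uint32_t>(value);
    for (int shift = 24; shift >= 0; shift -= 8) {
        out.push_back(static_cast<std::uint8_t>((bits >> shift) & 0xFFu));
    }
}

// Build a "/s_new" message asking the server to start a synth from the
// named SynthDef. Arguments: definition name, node ID, add action, target.
Bytes makeNewSynthMessage(const std::string& defName, std::int32_t nodeId) {
    assert(!defName.empty());
    Bytes message;
    appendString(message, "/s_new");  // address pattern
    appendString(message, ",siii");   // type tags: one string, three ints
    appendString(message, defName);
    appendInt32(message, nodeId);
    appendInt32(message, 0);          // add action 0: add to head of target
    appendInt32(message, 0);          // target 0: the root group
    return message;
}
```

A Python script, a Max/MSP patch or sclang itself would all end up putting the same bytes on the wire, which is why the server doesn't care who is on the other end.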

But let’s go back to that architecture for a moment. SuperCollider is actually two things: a client, sclang, that sends audio-producing messages and a server, scsynth, that responds to those messages by producing sound. When the server starts up it loads a library of DSP plug-ins – filters, signal generators, delay lines, reverbs, nearly 300 in all – called UGens, which form the processing backbone of the scsynth audio engine. Borrowing loosely from the SuperCollider Help documentation, we might visualize it as something like this:

Now, since that server is optimized for real time audio – and it is designed with an autonomous kinda slant in mind – wouldn’t it be something if you could embed it in an audio plug-in?

SuperColliderAU

Well, that’s exactly what SuperColliderAU does: it is an Audio Unit – built using the Core Audio SDK – and what it does is permit us to run a copy of scsynth (audio server + UGens) as a plug-in within a host application. Extending our previous genealogy chart to include SuperCollider and SuperColliderAU, we might visualize the relationship as looking something like this:

Note once again that AUMIDIEffectBase (and, by extension, SuperColliderAU) does not inherit through the AUInstrumentBase branch of the tree. Here’s the thing: we can use SuperColliderAU to create audio-generating plug-ins – devices that produce their own sounds as opposed to those that simply process external audio streams. We can also trigger those generated sounds and synchronize them to the host’s tempo clock. And, as mentioned earlier, we can exercise real-time control over parameters using MIDI.

What we cannot do – out of the box – is build virtual instruments; that is, devices that produce sound in the context of incoming MIDI notes. That functionality is provided through the MusicDeviceBase -> AUInstrumentBase chain, and we’re simply not hooked into it. You’ll see this fact – no virtual instruments – referred to quite often in scattered bits of documentation and forum comments. Now you know why.

Now, internally, the SuperColliderAU.component ‘file’ is not a file at all, it is a directory of files – as are, in fact, all Audio Units (don’t take my word for it: find an Audio Unit in your Plug-Ins folder, right-click on it and select “Show Package Contents.”)

In the case of SuperColliderAU, that component directory contains: a compiled copy of scsynth; a plug-ins folder housing the full library of UGens; a .plist file for configuring the server (with default settings for network port number, processing block size, stuff like that); an empty SynthDef folder; and a few resource-type support files.

This arrangement allows us to leverage the full power of SuperCollider within a host environment: we can now send audio-producing messages from SuperCollider (using sclang) to the embedded scsynth audio server, feed its output into other audio plug-ins within the host for further processing (if we so choose), record the result, mix it with other audio tracks and chop, slice, bounce ’til the cows come home.

But that’s not all. SuperColliderAU also allows us to hard-code our audio-producing instructions into a SynthDef file and stick that into a copy of the component (that’s what that empty SynthDef folder is for). In this way, we effectively create a new, customized plug-in. To use this method, you need to be comfortable enough with SuperCollider to write a SynthDef file, save it to the SCAUDK folder (located inside the SuperColliderAU folder) and run the makecustomplugin Unix executable from Terminal.

OK, so writing SynthDefs and working the command line are not exactly items 1 and 2 on the SuperCollider learning curve. Perhaps that’s why Gerard Roma also created a SuperCollider extension (aka, a “Quark”) to handle the grunt work for us.

AudioUnitBuilder

AudioUnitBuilder is (for all intents and purposes) a SuperCollider plug-in, designed to simplify the process of creating standalone Audio Units with SuperColliderAU. Once it has been installed and activated using SuperCollider’s built-in Quark packaging system, the Builder can be called from sclang just like any other class. Installation includes source code, a help file and a pair of working examples.

In essence, everything that AudioUnitBuilder does, it does by manipulating a copy of SuperColliderAU: working from a plug-in definition that we provide, it creates a new copy of the SuperColliderAU component; renames it according to our definition; writes a pair of .plist configuration files for the new plug-in’s server and whatever subset of UGens our DSP requires; creates a SynthDef file (again, according to our definition); builds the necessary resources; bundles the whole thing into a .component ‘file’; and saves it to our default plug-in directory.

So what goes into this plug-in definition? Jumping back for a moment to our discussion of Audio Units, a significant chunk of the work a developer puts into building an Audio Unit goes to setting up real-time parameters – the plug-in’s user controls – and defining whatever DSP processes the plug-in is intended to perform. AudioUnitBuilder simply provides us with a shortcut for feeding this stuff into a SynthDef file, then it builds the plug-in for us. There are five pieces of information we need to give it:

- name (the name of the plug-in)

- specs (a collection describing the plug-in’s user controls)

- componentType (the appropriate Apple-defined 4 char code to identify the plug-in’s type)

- componentSubtype (a unique 4 char identifier code to identify the plug-in itself – ‘unique’ meaning ‘unique among installed plug-ins created with SuperColliderAU’)

- func (the DSP the plug-in will perform)
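To see how these five pieces hang together, it can help to picture them as a single record. The C++ sketch below is only a mental model, not how AudioUnitBuilder is implemented: the real definition is written in the SuperCollider language, and everything here (`ControlSpec` and its fields, `PluginDefinition`, `isValid`, `processWithDefaults`, the "SketchGain" example) is hypothetical.

```cpp
#include <cassert>
#include <functional>
#include <string>
#include <vector>

// Hypothetical description of one user control. The field set is an
// assumption; the real specs collection may carry different information.
struct ControlSpec {
    std::string label;
    float minValue;
    float maxValue;
    float defaultValue;
};

// The five pieces of information, gathered into one record.
struct PluginDefinition {
    std::string name;
    std::vector<ControlSpec> specs;
    std::string componentType;     // Apple-defined, e.g. "aufx"
    std::string componentSubtype;  // chosen by us, unique per plug-in
    // Stand-in for the DSP: one input sample plus the current control values.
    std::function<float(float, const std::vector<float>&)> func;
};

// Basic sanity checks: nothing missing, both codes exactly 4 characters,
// every default inside its control's range.
bool isValid(const PluginDefinition& def) {
    if (def.name.empty() || !def.func) return false;
    if (def.componentType.size() != 4) return false;
    if (def.componentSubtype.size() != 4) return false;
    for (const ControlSpec& spec : def.specs) {
        if (spec.minValue > spec.defaultValue) return false;
        if (spec.defaultValue > spec.maxValue) return false;
    }
    return true;
}

// Run the DSP on one sample with every control at its default value.
float processWithDefaults(const PluginDefinition& def, float input) {
    assert(isValid(def));
    std::vector<float> controls;
    for (const ControlSpec& spec : def.specs) {
        controls.push_back(spec.defaultValue);
    }
    return def.func(input, controls);
}

// Example: a one-knob gain effect.
PluginDefinition makeGainDefinition() {
    PluginDefinition def;
    def.name = "SketchGain";
    def.specs = {{"Level", 0.0f, 1.0f, 0.5f}};
    def.componentType = "aufx";
    def.componentSubtype = "SKGN";
    def.func = [](float input, const std::vector<float>& controls) {
        return input * controls[0];
    };
    return def;
}
```

The point of the model is the division of labor: the name and the two codes tell the host what the plug-in is, the specs tell it which knobs to draw, and the function says what happens to the audio.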

The simplest and most direct way to do this is to save one of the example files under a new name and use it as a template. When we compile this file in SuperCollider – and don’t worry, compiling is as simple as selecting everything in the template and hitting ENTER – AudioUnitBuilder responds by generating a new audio unit component (using a name we provide) and placing it in the default plug-in folder (usually ~/Library/Audio/Plug-Ins/Components).

Of course, because AudioUnitBuilder presents us with a ‘shortcut’ for defining plug-ins, there are some limitations to what it can do. We’ve already noted we cannot (yet) create virtual instruments with SCAU. Additionally, there are no built-in mechanisms for creating drop down menus, factory presets, user presets, check boxes and other generic UI features normally associated with Audio Unit development.

* * *

And there you have it. Now that we’ve got the lay of the land, we’re ready to jump into building our first audio effect plug-in. And build we will in our next installment.

The first thing you need to do, of course, is download the latest stable version of SuperCollider as well as Apple’s Xcode IDE. You’ll also want to download, if you haven’t already, a working copy of the SuperColliderAU component. Then install SuperCollider and Xcode onto your system, place the SuperColliderAU component in your ~/Library/Audio/Plug-Ins/Components folder and head over to Part 2.
